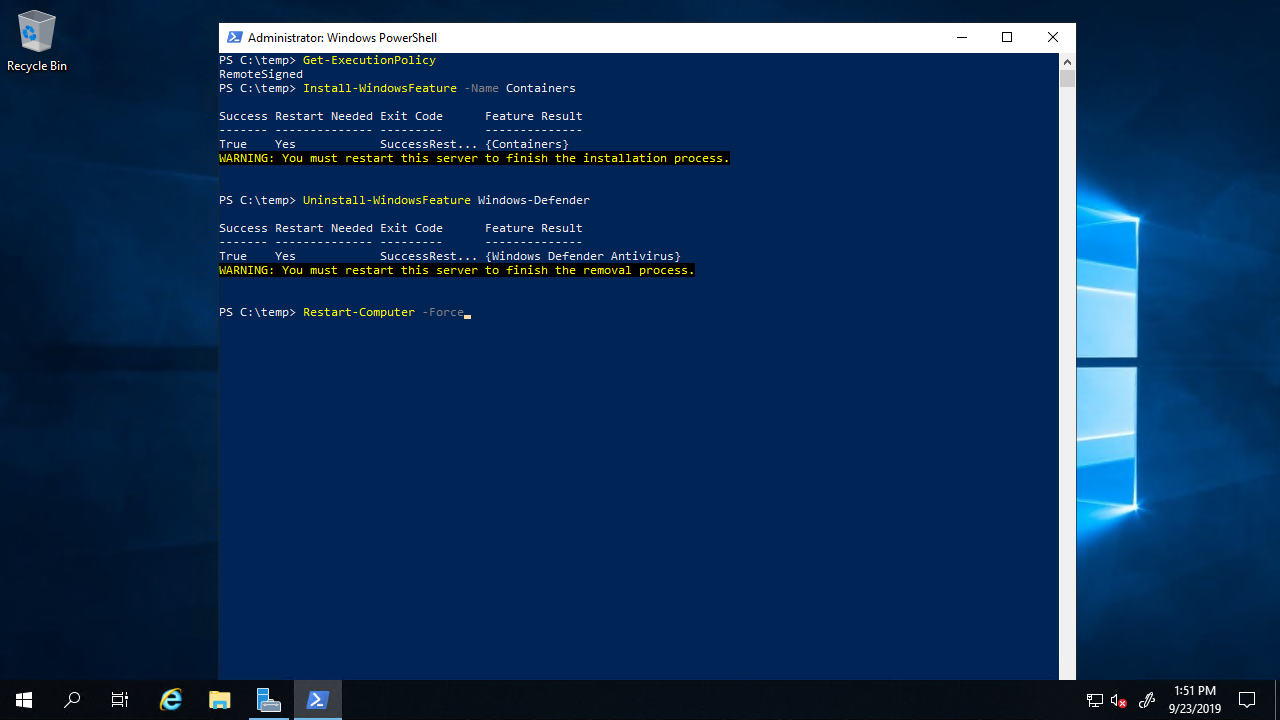

Install-WindowsFeature -Name Containers

Uninstall-WindowsFeature Windows-Defender

Restart-Computer -Force

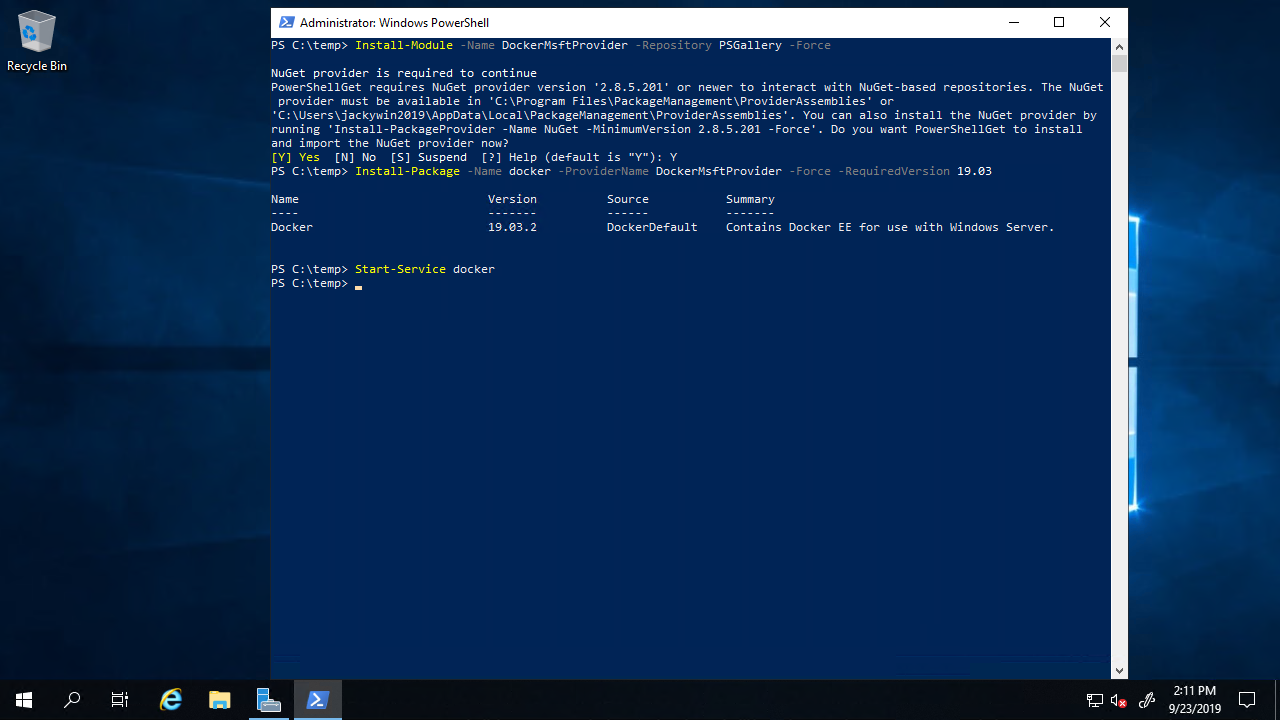

Install-Module -Name DockerMsftProvider -Repository PSGallery -Force

Install-Package -Name docker -ProviderName DockerMsftProvider -Force -RequiredVersion 19.03

Start-Service docker

docker pull mcr.microsoft.com/dotnet/core/samples:aspnetapp

docker run -it --rm -p 8000:80 --name aspnetcore_sample mcr.microsoft.com/dotnet/core/samples:aspnetapp

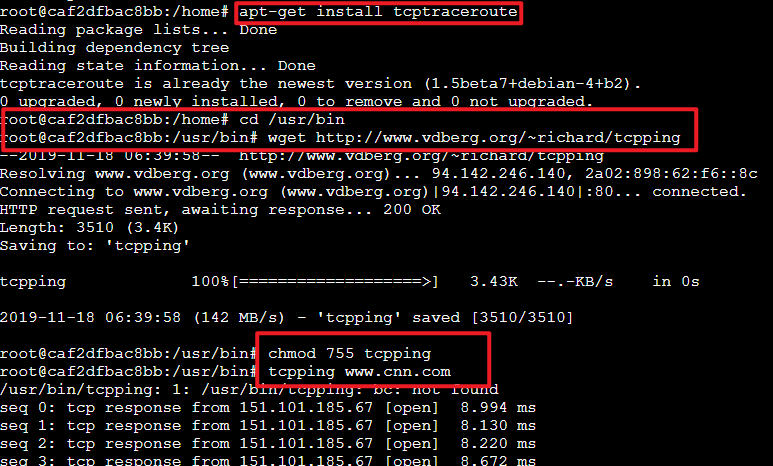

Installing tcpping on Azure Web App for Linux

To install tcptraceroute

apt-get install tcptraceroute

Download tcpping from the web.

cd /usr/bin

Using tcpping

tcpping www.cnn.com

HTH. By Jacky 2019-11-18

Previously you would need to write:

using System;

You can now write:

using System;

For more information:

https://docs.microsoft.com/en-us/dotnet/csharp/whats-new/csharp-7-1?WT.mc_id=ondotnet-c9-cxa#async-main

Enjoy. 2020-Jan.-3 By Jacky

apt-get update

sqlcmd -S yourdbname.database.windows.net,1433 -U username -P 'YourPassword' -Q 'SELECT @@Version'

Original article: https://msdn.microsoft.com/en-us/magazine/mt793270?f=255&MSPPError=-2147217396

Azure App Service is considered an excellent Platform as a Service (PaaS), offering an application platform for developers to build Web, mobile and API applications. Its offerings range from simple marketing and digital presence applications to scalable e-commerce solutions and hyper-scale, customizable applications.

App Service is fully managed, which means no administrative tasks are required to manage the underlying compute infrastructure (servers) on which your applications run. You don’t need to worry about server maintenance because the platform patches the OS and frameworks for you. Your application runs on virtualized servers, but the only thing you need to set is the maximum number of server instances on which you want your application to run. The platform handles scaling when your application needs additional compute resources and, at the same time, load balances traffic across multiple instances of your application.

While the App Service team does its best to hide any underlying implementation details, it’s good to be aware of how things work under the covers. This article covers the basic internal architecture of App Service (how the service is built and operates) and offers a few best practices for certain scenarios.

Global and Geo-Distributed Architecture

Cloud computing scales quickly and appears to have endless capacity. Cloud scale is like looking at a computer screen: from afar you see a clear and smooth picture, but when you take a closer look, you notice the image on the screen is composed of many little pixels. The cloud, like that image, is composed of many servers. App Service clusters groups of servers into a single unit called a “scale unit” (or a “stamp”). There are many such scale units across the globe in Azure datacenters.

As part of Azure, App Service has a global footprint. In every Azure-supported region, you’ll find App Service scale units running customers’ workloads (applications) and sets of regional control units. Control units are transparent to the customer (until they malfunction) and considered part of the platform. There’s one special control unit that’s being used as a gateway for all management API calls. For example, when a customer makes a request to create a new application, either through the portal, command-line interface or directly through the Azure REST API, the request is routed to a central Azure endpoint (management.azure.com). The Azure Resource Manager, or ARM (bit.ly/2i6UD07), lets you work with different Azure resources in your application as a single group. The API defined by ARM lets you manage Azure resources. ARM doesn’t actually manage individual resources. Each service in Azure has its own management API implementation that is proxied by ARM. In the case of App Service, ARM forwards any App Service API calls to App Service Geo-Master.

The Geo-Master has context about all scale units worldwide. For example, when you create a new App Service application (or Web site), Geo-Master finds the most suitable scale unit for your application and then forwards the create request to the appropriate scale unit. The scale unit is now tasked with provisioning a new app and allocating any required resources. Figure 1 shows the flow of creating a new app.

Figure 1 Global Distribution of App Service Scale Units

Here’s the process to create a new app:

User makes a request to create a new site.

ARM makes sure the user has access to the resource to allow the given operation (create in this case) and forwards the request to App Service Geo-Master.

Geo-Master finds the most suitable scale unit for the user’s request and forwards the request.

The scale unit creates the new application.

Geo-Master reports success on the create request.

It’s important to understand that App Service has many scale units, but your application usually runs within a single one. When you use Azure Traffic Manager to run in multiple regions, your application runs in two or more separate scale units. Even then, from the point of view of each individual scale unit, your app is constrained to that scale unit.

What Is an App Service Scale Unit?

An App Service scale unit is a collection of servers that host and run your applications. A typical scale unit can have more than 1,000 servers. Clustering servers enables economies of scale and reuse of shared infrastructure. The fundamental building block of an App Service scale unit is an Azure Cloud Service deployment (remember, App Service was first released as a preview in June 2012). Any given scale unit is autonomous and can operate on its own.

Scale Unit Main Building Blocks

The main function of a scale unit is to host and run customer applications. Applications run on Windows servers and are referred to as Web Workers, or Workers for short. The majority of servers in a given scale unit are Workers. However, a scale unit also includes several additional support servers required to achieve the functionality provided by App Service. Support servers have roles, and each role is deployed on multiple instances for redundancy and scale.

Front End

The front end is a Layer 7 load balancer, acting as a proxy, distributing incoming HTTP requests between different applications and their respective Workers. Currently, the App Service load-balancing algorithm is a simple round robin between a set of servers allocated for a given application.
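The round-robin behavior described above can be illustrated with a minimal sketch. The class name `RoundRobinFrontEnd`, the app name and the worker names are hypothetical; this is not App Service's actual implementation, only a toy model of rotating requests across the Workers allocated to one application.

```python
from itertools import cycle


class RoundRobinFrontEnd:
    """Toy model of a front end that rotates requests across an app's Workers."""

    def __init__(self, allocations):
        # allocations maps an app name to the list of Workers assigned to it.
        self._rotations = {app: cycle(workers) for app, workers in allocations.items()}

    def route(self, app):
        # Return the next Worker in that app's rotation.
        return next(self._rotations[app])


# Hypothetical allocation: one app assigned to three Workers.
front_end = RoundRobinFrontEnd({"awesomewebapp": ["worker-1", "worker-2", "worker-3"]})
routed = [front_end.route("awesomewebapp") for _ in range(5)]
print(routed)
```

Each application keeps its own rotation, so adding a Worker to one application's list would not change how requests for other applications are distributed.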

Web Workers

Workers are the backbone of the App Service scale unit. They run your applications.

With App Service, you can choose how you want to run your application: on shared or dedicated servers. You do so by selecting an App Service Plan, which defines a set of capabilities, features and server allocations. A Shared Worker hosts applications from multiple customers, whereas Dedicated Workers are guaranteed to run one or more applications of a single customer. There are several dedicated server types and sizes from which you can choose. The larger the server size, the more CPU and memory resources are available for allocated applications. The App Service Plan defines the number of pre-allocated servers for your application.

An App Service scale unit has several pools of Workers pre-provisioned and ready to host your applications, as illustrated in Figure 2, Section 1. When you define your dedicated App Service Plan to a size of two servers, App Service allocates two servers, as illustrated in Figure 2, Section 2. Next, when you scale out your App Service Plan—for example, adding two more Workers—available Workers are allocated from a pool of ready-to-go Workers, as illustrated in Figure 2, Section 3. Because Workers are already pre-provisioned and warm, all that needs to happen is for your application to get deployed on the Worker. Once the app is deployed, the Worker is inserted into the rotation and the front end can allocate traffic to it. This whole process usually takes a few seconds.

Figure 2 Server Application Process in App Service Scale Unit

Figure 2, Section 4 shows multiple App Service Plans, identified as multi-colored rectangles, each representing an App Service Plan that can belong to multiple customers.

File Servers

Any app needs storage to hold content such as HTML, .js files, images, or code files, and any other content required for the application to work. A file server mounts Azure Storage blobs and exposes them as network drives to the Worker. A Worker, in turn, maps such network drives as local, allowing any application running on any given Worker to use the “local” drive, just like you would expect if the application were running on a server using its local disk. Any file-related read/write operation performed by the application passes through a file server.

API Controllers

API controllers can be viewed as an extension to App Service Geo-Master. While the Geo-Master is aware of all App Service applications across all scale units, it’s the API controller that actually performs the management operation that affects your applications. Geo-Master delegates API fulfilment to a given scale unit via the API controllers. For example, when Geo-Master passes an API call to create a new application, the API controller orchestrates the required steps to create the application at the scale unit. When you use the Azure portal to reset your application, it’s the API controller that notifies all Web Workers currently allocated to your application to restart your app.

Publishers

Azure App Service supports FTP access to any application. Because app content is stored in Azure Storage blobs and mapped by file servers, App Service has a Publisher role to expose FTP functionality. The Publisher role lets customers use FTP to access their application content and logs.

It’s important to note that there are many ways to deploy applications other than FTP. Common deployment methods include Web Deploy (from Visual Studio) and any of the supported Continuous Deployment options, such as Visual Studio Release Manager or GitHub.

SQL Azure

Each App Service scale unit uses Azure SQL Database to persist application metadata. Each application that’s assigned to a given unit of scale has a representation in a SQL Database. The SQL Database is also used to hold runtime information about applications.

Data Role

All roles require data found in the database to operate. As examples: a Web Worker needs site configuration information when launching an app; front ends need to know which servers are assigned to run a specific application in order to correctly forward HTTP requests to the appropriate servers; and controllers read and update data from the database based on API calls made by customers. The data role can be described as a cache layer between SQL Database and all other roles in a given unit of scale. It abstracts the data layer (SQL Database) from the rest of the roles, improving scale and performance, as well as simplifying software development and maintenance.

Less-Than-Obvious Best Practices

Now that you know how Azure App Service is built, we’ll review several tips and tricks from the App Service team. These are hands-on lessons learned by App Service engineering teams from numerous customer engagements.

Controlling Density

A majority of customers run a low number (fewer than 10) of applications per App Service Plan. However, there are many scenarios where customers are running many more applications. It’s important to avoid accidentally oversaturating the compute capacity of the underlying servers.

Let’s start with the basic hierarchy of applications and their compute resources. Consider an App Service Plan with two Web apps and one mobile back-end app associated with it. The plan is set to two servers.

By default, all applications contained in a given App Service Plan run on all the available compute resources (servers) allocated to that Service Plan. All three applications run on both servers. In the simple case where an App Service Plan has a single server, it’s simple to understand: All of the applications in the App Service Plan run on a single server.

It’s somewhat less intuitive as to what happens when there are multiple compute resources allocated to your App Service Plan. As an example, if a single App Service Plan has 10 compute resources, then every app in the App Service Plan will run on every compute resource. If there are 50 applications in the App Service Plan, all 50 will be running on the first server, and the same 50 will be running on the second server, and so on, to the 10th server, which will also be running all 50 apps.
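The arithmetic behind this default placement can be sketched in a few lines. The function name `default_placement` is hypothetical and the model is deliberately simplified: it only counts app instances and ignores actual CPU and memory usage.

```python
def default_placement(app_count, server_count):
    # By default, every app in the plan runs on every server allocated to the plan.
    return {
        "apps_per_server": app_count,
        "total_app_instances": app_count * server_count,
    }


before = default_placement(app_count=50, server_count=1)
after = default_placement(app_count=50, server_count=10)
print(before)
print(after)
```

In this model, scaling out from one server to 10 multiplies the total number of running app instances, while the number of apps hosted on each individual server stays at 50.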

For some scenarios, where your application requires a lot of compute resources, usually to handle an increase in incoming HTTP requests, you’ll want to have your application running on all available servers. However, sometimes this is an unintended consequence that occurs when an App Service Plan is scaled out from one server to many. If an App Service Plan is under CPU and/or memory pressure due to a large number of applications running in it, increasing the number of servers in that App Service Plan won’t address the problem.

Instead, consider the traffic distribution for each application and separate the long tail of low-volume apps into a separate App Service Plan. Consider running high-volume apps in separate App Service Plans. Using the 50 app example earlier, after analyzing traffic patterns you might end up with the following allocation to App Service Plans and compute resources:

40 low-volume applications remain in a single App Service Plan running on one compute resource.

Five mid-to-low volume applications use a second App Service Plan running on one compute resource.

The remaining five applications are found to have high-volume usage. Each application is deployed onto a separate App Service Plan. An auto-scale rule is set on each App Service Plan to use a minimum of one compute resource, with rules to scale in/out based on CPU and memory utilization.

The net result of this approach is that the 50 apps use seven compute resources at a minimum, with the five high-volume applications each having the necessary headroom to independently scale out on-demand based on load.
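As a quick check of the numbers in this allocation, the split can be written down as data and summed. The plan names below are hypothetical labels for illustration; only the app counts and minimum server counts come from the example above.

```python
# One shared plan for the long tail, one for mid-to-low volume apps,
# and one dedicated plan per high-volume app.
plans = [
    {"name": "low-volume", "apps": 40, "min_servers": 1},
    {"name": "mid-to-low-volume", "apps": 5, "min_servers": 1},
]
plans += [{"name": f"high-volume-{i}", "apps": 1, "min_servers": 1} for i in range(1, 6)]

total_apps = sum(plan["apps"] for plan in plans)
min_compute = sum(plan["min_servers"] for plan in plans)
print(total_apps, min_compute)
```

The seven plans together host all 50 apps on a minimum of seven compute resources, and only the five high-volume plans are expected to grow beyond their minimum under load.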

Per-App Scaling

Another alternative for running large numbers of applications in a more efficient manner is to use the per-app scaling feature of Azure App Service. The document at bit.ly/2iQUm1S covers per-app scaling in detail. Per-App scaling lets you control the maximum number of servers allocated to a given application, and you can do so per application. In this case, an application will run on the defined maximum number of servers and not on all available servers.

Using the earlier 50 app example, with per-app scaling enabled for the App Service Plan, all 50 apps can be assigned to the same App Service Plan. Then, the scaling characteristics of individual apps can be modified:

40 low-volume applications set to run on a maximum of a single server each.

Five mid- to low-volume applications set to run on a maximum of two servers each.

Five remaining high-volume applications set to run on a maximum of 10 servers.

The underlying App Service Plan can start out with a minimum of five servers. Auto-scale rules can then be set to scale out as needed based on memory pressure or CPU.

Azure App Service will automatically handle assignment of applications to compute resources. The service will also automatically constrain the maximum number of running application instances based on the number-of-Workers setting for each individual application. As a result, increasing the number of Workers in the App Service Plan will not result in 50 app instances spinning up on each newly available virtual machine.

To summarize, per-app scaling “packs” applications onto the underlying servers associated with an App Service Plan. Per-app scaling does not result in every application running on every compute resource associated with an App Service Plan as described earlier.
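The difference between the default placement and per-app scaling can be approximated with a small model. The function names are hypothetical, and the model assumes that an app can never run on more servers than the plan currently has; it counts instances only and does not model how the service actually packs apps onto servers.

```python
def default_instances(app_count, plan_servers):
    # Without per-app scaling, every app runs on every server in the plan.
    return app_count * plan_servers


def per_app_instances(app_groups, plan_servers):
    # With per-app scaling, each app runs on at most its own server limit.
    # Assumption: an app cannot use more servers than the plan currently has.
    return sum(count * min(max_servers, plan_servers) for count, max_servers in app_groups)


# (number of apps, maximum servers per app) from the 50-app example.
app_groups = [(40, 1), (5, 2), (5, 10)]

for plan_servers in (5, 10):
    print(
        plan_servers,
        default_instances(50, plan_servers),
        per_app_instances(app_groups, plan_servers),
    )
```

Under these assumptions, a five-server plan runs 75 app instances with per-app scaling instead of 250, and growing the plan to 10 servers adds instances only for the five high-volume apps.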

Application Slots

App Service has a feature called “deployment slots” (bit.ly/2iJzv3f). In a nutshell, a deployment slot enables you to have another application (slot) other than your production app. It’s another application that you can use to test new code prior to swapping into production.

Application slots are among the most-used features in App Service. However, it’s important to understand that each application slot is also an application in its own right. This means application slots can have custom domains associated with them, different SSL certificates, different application settings and so on. It also means the assignment of an application slot to an App Service Plan can be managed separately from the App Service Plan associated with the main production slot.

By default, each application slot is created in the same App Service Plan as the production slot. For low-volume applications, and/or applications with low CPU/memory utilization, this approach is fine.

However, because all applications in an App Service Plan run on the same servers, by default all of an application’s slots run on the exact same underlying servers as production. This can lead to problems such as CPU or memory constraints if you decide to run stress tests against non-production slots, which run on the same servers as your production application slot.

If resource competition is limited to scenarios such as running load tests, you can temporarily move a slot to a different App Service Plan, with its own set of servers, as follows:

Create an additional App Service Plan for the non-production slots. Important note: Each App Service Plan needs to be in the same resource group and same region as the production slot’s App Service Plan.

Move a non-production slot to a different App Service Plan and, thus, a separate pool of compute resources.

Carry out resource-intensive (or risky) tasks while running in the separate App Service Plan. For example, load tests can be run against a non-production slot without negatively impacting the production slot because there won’t be any resource contention.

When the non-production slot is ready to be swapped into production, move it back to the same App Service Plan running the production slot. Then the slot swap operation can be carried out.

Deploying to Production with No Downtime

You have a successful application running on an App Service Plan and a great team that updates your application on a daily basis. In this case, you don’t want to deploy bits directly into production. You want to control the deployment and minimize downtime. For that, you can use your application slots. Set your deployment to the “pre-production” slot, which can be configured with production settings, and deploy your latest code. You can now safely test your app. Once you’re satisfied, you can swap the new bits into production. The swap operation doesn’t restart your application; instead, the Controller notifies the front-end load balancer to redirect traffic to the latest slot.

Some applications need to warm up before they can safely handle production load—for example, if your application needs to load data into cache, or for a .NET application to allow the .NET runtime to JIT your assemblies. In this case, you’ll also want to use application slots to warm up your application prior to swapping it into production.

We often see customers having a pre-production slot that’s used to both test and warm up the application. You can use Continuous Deployment tools such as Visual Studio Release Manager to set up a pipeline for your code to get deployed into pre-production slots, run tests for verification and warm all required paths in your app prior to swapping it into production.

Scale Unit Network Configuration

The App Service scale unit is deployed on a Cloud Service. This implies some network configuration and functionality that you should become familiar with to fully understand any network effects on your apps.

The scale unit has a single Virtual IP (VIP) address exposed to the world. All applications allocated to a given unit of scale are serviced through this VIP. The VIP is the representation of the Cloud Service on which the App Service scale unit is deployed.

App Service applications only serve HTTP (port 80) and HTTPS (port 443) traffic. Each App Service application has default built-in HTTPS support for the azurewebsites.net domain name. App Service supports both Server Name Indication (SNI) and IP-based Secure Sockets Layer (SSL) certificates. In the case of IP-based SSL, a given application is allocated a dedicated IP address for only inbound traffic, which is associated with the Cloud Service deployment. Please note: Front ends terminate the SSL connection for all HTTPS requests, for all applications and any type of certificate. The front end then forwards the request to the designated Worker for a given application.

Public VIP

By default, there’s a single public VIP for all inbound HTTP traffic. Any app is addressable through that single VIP. If you have an app on App Service, try running the nslookup command (from a Windows or PowerShell console) and see the result. Here’s an example:

#1 PS C:\> nslookup awesomewebapp.azurewebsites.net

#2 Server: UnKnown

#3 Address: 10.221.0.3

#4 Non-authoritative answer:

#5 Name: waws-prod-bay-001.cloudapp.net

#6 Address: 168.62.20.37

#7 Aliases: awesomewebapp.azurewebsites.net

Here’s a review of the output of awesomewebapp.azurewebsites.net:

Line #1 runs an nslookup querying resolution for awesomewebapp.azurewebsites.net.

Line #5 shows the domain name of the scale unit running the awesomewebapp app. You’ll notice that an App Service scale unit is deployed on Azure Cloud Service (by the cloudapp.net suffix). WAWS stands for Windows Azure Web Sites: Windows Azure was the earlier name of Azure, and Web Sites was the original name of App Service.

Line #6 shows the VIP of the scale unit. All the applications that are hosted and running on waws-prod-bay-001 (Line #5) are addressable on the public VIP designated.

Line #7 shows all domain aliases mapped to the same IP address.

Outbound VIPs

Most likely your application is connected to other Azure and non-Azure services. As such, your application makes outbound network calls to endpoints that are not on your application’s scale unit. This includes calling out to Azure services such as SQL Database and Azure Storage. There are up to five VIPs (the one public VIP and four dedicated outbound VIPs) used for outbound communication. You can’t choose which VIP your app uses, and all outbound calls from all apps in the scale unit use the five allocated VIPs. If your application uses a service that requires you to whitelist the IPs allowed to make API calls into it, you’ll need to register all five VIPs of the scale unit. To view which IPs are allocated to outbound VIPs for a given scale unit (or, from your perspective, for your app), go to the Azure portal, as shown in Figure 3.

Figure 3 App Service Application Outbound IP Address View in Azure Portal

If you’re looking for a dedicated set of inbound and outbound IPs, you can explore this by using a fully isolated and dedicated App Service Environment at bit.ly/2hVRSlR.

IP and SNI SSL

App Service supports IP-based SSL certificates. When using IP-SSL, App Service allocates to your application a dedicated IP address for only in-bound HTTP traffic.

Unlike other dedicated IP addresses in Azure, the IP address allocated by App Service via IP-SSL is yours only for as long as you opt to use it. You don’t own the IP address, and when you delete your IP-SSL, you might lose the IP address (as it might be allocated to a different application).

App Service also supports SNI SSL, which doesn’t require a dedicated IP address and is supported by modern browsers.

Network Port Capacity for Outbound Network Calls

A common requirement for applications is the ability to make outbound network calls to other network endpoints. This includes calling out to Azure internal services such as SQL Database and Azure Storage. It also includes cases where applications make calls to HTTP/HTTPS API endpoints—for example, calling a Bing Search API or calling an API “application” that implements back-end business logic for a Web application.

In almost all of these cases, the calling app running on Azure App Service is implicitly opening a network socket and making outbound calls to endpoints that are considered “remote” from an Azure Networking perspective. This is an important point because calls made from an app running on Azure App Service to a remote endpoint rely on Azure Networking to set up and manage a table of Network Address Translation (NAT) mappings.

Creating new entries in this NAT mapping takes time and there’s ultimately a finite limit on the total number of NAT mappings that can be established for a single Azure App Service scale unit. Because of this, App Service enforces limits on the number of outbound connections that can be outstanding at any given point in time.

The maximum connection limits are the following:

1,920 connections per B1/S1/P1 instance

3,968 connections per B2/S2/P2 instance

8,064 connections per B3/S3/P3 instance

64K max upper limit per App Service Environment
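The per-instance limits listed above can be captured in a small lookup table, for example to check how much headroom an instance has left. The names `OUTBOUND_CONNECTION_LIMITS` and `connection_headroom` are hypothetical, the figures are the ones from this article and may have changed since, and the 64K App Service Environment ceiling is not modeled.

```python
# Per-instance outbound connection limits, keyed by instance size (1, 2 or 3).
LIMITS_BY_SIZE = {1: 1920, 2: 3968, 3: 8064}

# Expand to the B/S/P tier names used in the list above, e.g. "S2" -> 3968.
OUTBOUND_CONNECTION_LIMITS = {
    f"{tier}{size}": limit
    for size, limit in LIMITS_BY_SIZE.items()
    for tier in "BSP"
}


def connection_headroom(tier, open_connections):
    # Remaining outbound connections before the instance hits its limit.
    return OUTBOUND_CONNECTION_LIMITS[tier] - open_connections


print(connection_headroom("S2", 3000))
```

A negative or near-zero result for a given instance would suggest that the application is close to the point where outbound calls start to fail.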

Applications that “leak” connections invariably run into these connection limits. Applications will start intermittently failing because calls to remote endpoints fail, with the failures sometimes correlating closely to periods of higher application load. You’ll frequently see errors like the following: “An attempt was made to access a socket in a way forbidden by its access permissions aaa.bbb.ccc.ddd.”

The likelihood of running into this problem can be substantially mitigated with a few best practices:

For .NET applications using ADO.NET/EF, use database connection pooling.

For PHP/MySQL, use persistent database connections.

For Node.js applications making outbound HTTP/HTTPS calls, configure keep-alives so that outbound connections are reused. This configuration is described at bit.ly/2iGrcoo.

For .NET applications making outbound HTTP/HTTPS calls, pool and reuse instances of System.Net.Http.HttpClient or use Keep-alive connections with System.Net.HttpWebRequest. Note: Remember to increase the System.Net.ServicePointManager.DefaultConnectionLimit because you’ll otherwise be limited to two concurrent outbound connections to the same endpoint.
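The idea common to these practices is to reuse one outbound connection for many calls instead of opening a new socket per call. The sketch below shows that idea in Python rather than in the .NET or Node.js stacks named above, against a throwaway local server; the handler name `EchoHandler` and the `seen_client_ports` set are hypothetical helpers that exist only to show how many distinct client sockets the server observed.

```python
import http.client
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer

seen_client_ports = set()


class EchoHandler(BaseHTTPRequestHandler):
    protocol_version = "HTTP/1.1"  # allow keep-alive so the connection can be reused

    def do_GET(self):
        # Record the client's source port; one port means one outbound socket.
        seen_client_ports.add(self.client_address[1])
        body = b"ok"
        self.send_response(200)
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):
        pass  # keep the demo quiet


# Local stand-in for a remote endpoint, bound to a free port.
server = HTTPServer(("127.0.0.1", 0), EchoHandler)
threading.Thread(target=server.serve_forever, daemon=True).start()

# Reuse one connection for several requests instead of opening a socket per call.
connection = http.client.HTTPConnection("127.0.0.1", server.server_port)
for _ in range(3):
    connection.request("GET", "/")
    connection.getresponse().read()  # drain the response before the next request
connection.close()

server.shutdown()
server.server_close()
print(len(seen_client_ports))
```

Creating a new `HTTPConnection` inside the loop instead would open a separate socket for every request, which is the pattern that tends to exhaust the outbound connection limits under load.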

There are additional limitations imposed by the App Service Sandbox. These are lower-level limits and constraints for Azure App Service and you can read about them in more detail at bit.ly/2hXJ6lL.

Wrapping Up

Azure App Service provides a rich PaaS offering for Web, mobile and API applications. While the service has a lot of moving internal parts, these are abstracted away so that developers can concentrate on writing great apps while the service handles the complexities of running those apps at global scale.

Many of the best practices for App Service revolve around application scaling. Having a good understanding of how applications map to Web Workers in an App Service Plan is important as you increase the number of your apps running on the service, while still maintaining an optimal scaling configuration.

Given the cloud-first world we operate in, Azure and Azure App Service are constantly evolving at a rapid pace. Many new innovations are expected in 2017.

Scale Unit Components

It might seem as if scale unit components are highly dependent on each other. However, by design, they’re loosely coupled. An application that’s currently running (actively serving HTTP traffic) on a given Web Worker can continue to serve HTTP traffic even if other roles in the scale unit are malfunctioning.

For example, a publisher not working properly might hinder FTP access, but that doesn’t affect an application’s HTTP traffic or other deployment options. If the controller has a bug preventing creation of new applications, it doesn’t mean apps already assigned to the scale unit stop working.

Yochay Kiriaty is a principal program manager on the Microsoft Azure team, specifically driving Web, mobile, API and functions experiences as part of the Azure App Service Platform. Kiriaty has been working with Web technologies since the late 90s and has a passion for scale and performance. You can contact him at yochay@microsoft.com and follow him on Twitter: @yochayk.

Stefan Schackow is a program manager on the Azure App Services team who has worked on the Web app cloud offering since its earliest days. In Azure, he leads a team of program managers who work on the development and deployment of Azure App Service, as well as the development of Microsoft’s on-premises/cloud hybrid products (Azure Pack and Azure Stack). He can be contacted at stefsch@microsoft.com.

Thanks to the following Microsoft technical experts for reviewing this article: Eduardo Laureano and Nir Mashkowski

PS C:\temp> New-AzResourceGroupDeployment -ResourceGroupName "jackywinupdate1rg" -TemplateFile "C:\Users\jchiou\Downloads\azuredeploy.json"

{

# Result:

FYI.

https://docs.microsoft.com/en-us/archive/blogs/jchiou/

HTH. 2019-July-1 by Jacky Chiou